Robots Txt 7.x-1.4

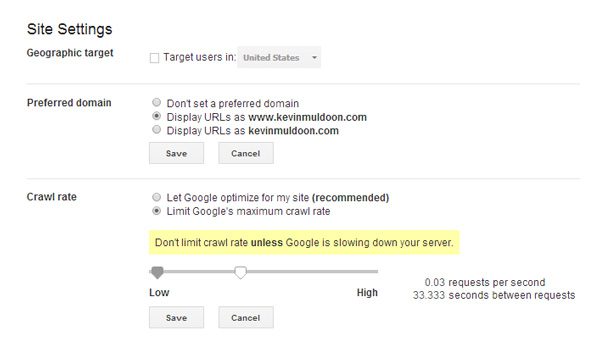

Multiple domains running on the same server. The best solution is using the robots meta tag on the page itself.

My Robots Txt Shows User Agent Disallow What Does It Mean Quora

0.2.3 Added: a robots.txt parser that identifies Sitemap entries.
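As a rough illustration of what "a parser that identifies Sitemap" can look like, the sketch below uses Python's standard `urllib.robotparser` module rather than the package from the changelog line. The sample file contents, the `example.com` URLs, and the helper name `find_sitemaps` are assumptions made up for this example.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Sitemap: https://example.com/sitemap.xml
"""


def find_sitemaps(robots_txt: str) -> List[str]:
    """Return every Sitemap URL declared in the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present (Python 3.8+).
    return parser.site_maps() or []


if __name__ == "__main__":
    print(find_sitemaps(ROBOTS_TXT))
```

Parsing from a string keeps the example offline; a real crawler would more likely call `set_url()` and `read()` on the parser to fetch the file first.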

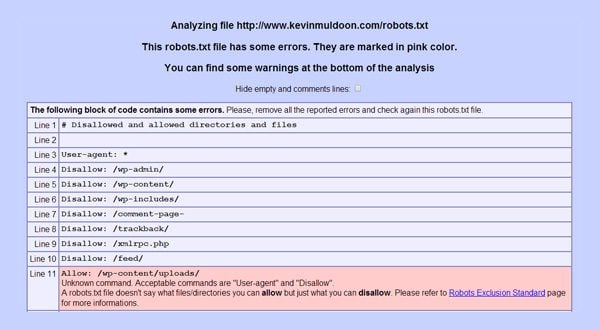

Robots.txt 7.x-1.4. Learn the difference between robots.txt and the meta robots tag of a website, and why a particular post, page, or piece of content may not be indexed in Google. Ideally, robots.txt is available at /robots.txt, as specified in the standard. Under the robots.txt specification, you cannot disallow indexing or link following with robots.txt; it only controls crawling.

1.1 What Is PetalBot? Clicking this will now pull data from the robots.txt file included in the module directory instead of returning nothing, which effectively set robots.txt to a blank file.

Robots are often used by search engines to categorize websites. If you're not sure which to choose, learn more about installing packages. 1.6 Why Can My Website Still Be Found in Petal When It Has Already Been Added to robots.txt?

First, create a new template file called robots.txt in your app's template folder, the same directory as all your HTML templates. In CS-Cart and Multi-Vendor, you can edit these instructions right in the Administration panel. It should also be clarified that robots.txt does not stop Google from indexing pages; it stops Google from reading their content.

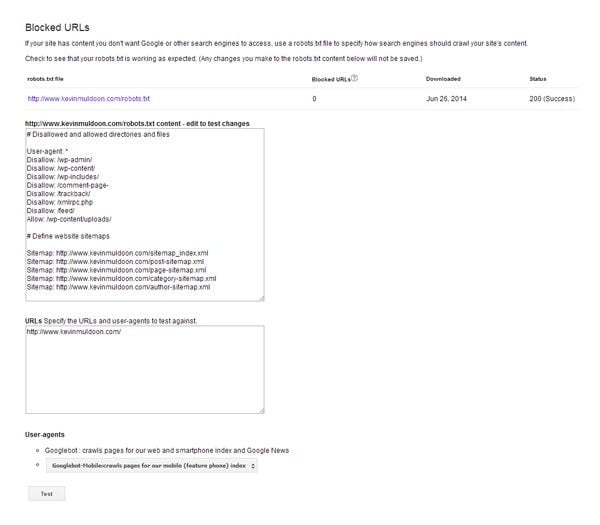

If you do not have an existing robots.txt, one will be created for you. Adding Disallow rules to the robots.txt file would address other search engines as well. It allows a website to provide instructions for search engine bots, hence the name robots.txt.

Be sure to name it correctly, using only lowercase letters. Google is now recognising the site, as a site test has shown; however, it doesn't rank well for anything. View usage statistics for this release.

prisma informatik has developed the NAVdiscovery Toolbox specifically for users of Microsoft Dynamics NAV (now Dynamics 365 Business Central), QlikView, and Qlik Sense, and it offers numerous other features. Preparing the ERP data from Microsoft Dynamics NAV (now Business Central) is usually a laborious and time-consuming task for users. V147 Being Replaced with.

0.2.2 Fixed: support for URLs up to 10000 characters long. When they were ready, they removed the robots.txt by clicking the "Allow search engines to crawl this site" button. Read more about robotstxt 4.7.x-1.x-dev.

How to check the robots.txt file. It took a month and a half for their blog to show up in search engines once the robots.txt was removed. Go to the Blogger Dashboard and click the Settings option, scroll down to the Crawlers and indexing section, and enable custom robots.txt with the switch button.

robotstxt-webpack-plugin@4.0.1 has 2 known vulnerabilities found in 2 vulnerable paths. By robertDouglass on 12 March 2006. Learn more about robotstxt-webpack-plugin@4.0.1 vulnerabilities.

A Nuxt.js module that injects a middleware to generate a robots.txt file. This is one of the reasons why your robots.txt file should be used as minimally as possible, and when it is used, you should have a backup in place, such as the canonical or noindex tag on a page. The basic structure of the robots.txt file specifies the user agent, then a list of disallowed URL slugs, followed by the sitemap URL.
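The basic structure described above (user agent, disallowed paths, sitemap URL) can be sketched in a few lines of Python. This is only an illustration: the helper names `build_robots_txt` and `is_allowed`, the paths, and the `example.com` URLs are assumptions, and the check uses the standard library's `urllib.robotparser`, not any of the modules mentioned in this post.

```python
from typing import Iterable
from urllib.robotparser import RobotFileParser


def build_robots_txt(user_agent: str, disallowed: Iterable[str], sitemap_url: str) -> str:
    """Assemble a minimal robots.txt: user agent, Disallow rules, then Sitemap."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed]
    lines.append("")  # blank line closes the rule group
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


def is_allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Check whether the given agent may crawl the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    text = build_robots_txt("*", ["/private/", "/tmp/"], "https://example.com/sitemap.xml")
    print(text)
    print(is_allowed(text, "AnyBot", "https://example.com/private/a.html"))
```

Keeping the generated file this small fits the advice above to use robots.txt minimally and to rely on page-level tags for anything that must stay out of the index.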

Connecting QlikView, Qlik Sense and Dynamics 365. The robots exclusion standard, also known as the robots exclusion protocol or simply robots.txt, is a standard used by websites to communicate with web crawlers and other web robots. The standard specifies how to inform the web robot about which areas of the website should not be processed or scanned.

Modern web servers such as Apache can log accesses to a number of domains to one file, which can cause confusion when attempting to see which web server was accessed at which point. It means noindex and nofollow.

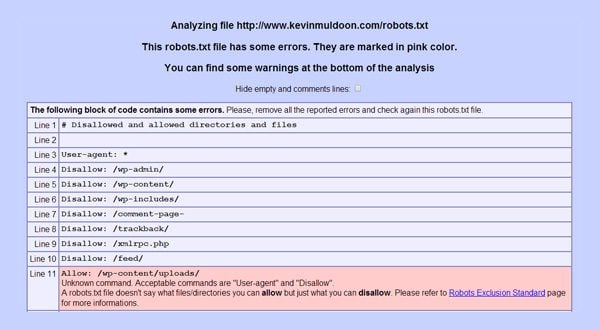

The editor contains basic validation for the robots.txt protocol. New for Umbraco 8: Robots.txt Editor v8. The function of PetalBot is to access both PC and mobile websites and establish an index database, which enables users to search the content of your site in Petal search.

PetalBot is an automatic program of the Petal search engine. The robots.txt file is a configuration file that you can add to your WordPress site's root directory. Noindex and nofollow mean you do not want search engines to index your site or follow its links.
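To see which noindex or nofollow directives a page actually declares, one option is to read its robots meta tag. The sketch below does this with Python's standard `html.parser`; the class name `RobotsMetaParser`, the helper `robots_directives`, and the sample HTML are assumptions for illustration only.

```python
from html.parser import HTMLParser
from typing import Set


class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() != "robots":
            return
        # The content attribute is a comma-separated list such as "noindex, nofollow".
        for part in attr_map.get("content", "").split(","):
            part = part.strip().lower()
            if part:
                self.directives.add(part)


def robots_directives(html: str) -> Set[str]:
    """Return the set of robots meta directives found in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    print(sorted(robots_directives(page)))
```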

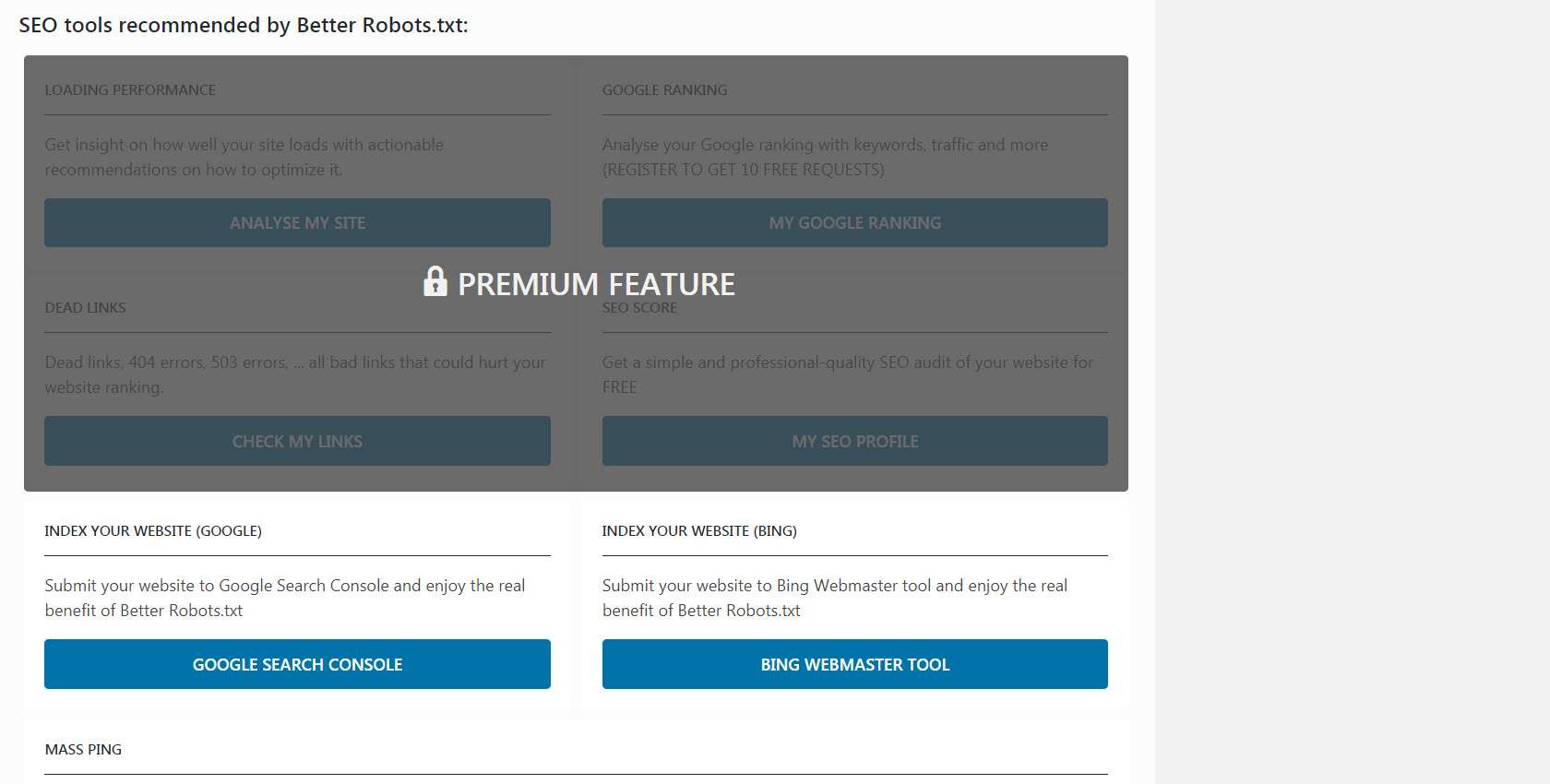

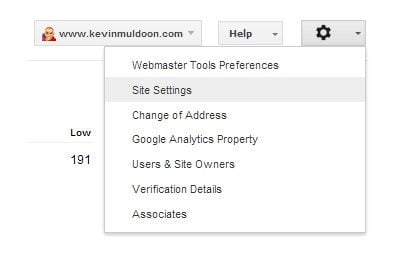

Files for robotstxt version. Adds a dashboard editor to the settings section, which allows access to your robots.txt file from the root of your website. There is a non-standard Noindex field, which Google (and likely no other consumer) supported as an experimental feature.

Add the @nuxtjs/robots dependency to your project. This required a new urlHash SHA1 field on the url table to support the. Click on custom robots.txt and a window will open up; paste the robots.txt file and update.
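The changelog text does not say how the urlHash field is computed. One common reason for such a field is that a fixed-length digest is easier to index and compare than a URL that may run to 10000 characters. The sketch below shows that idea with Python's `hashlib`; the function name `url_hash` and the UTF-8 encoding are assumptions, not the project's actual scheme.

```python
import hashlib

# Upper bound taken from the "URLs up to 10000 characters" changelog entry.
MAX_URL_LENGTH = 10000


def url_hash(url: str) -> str:
    """Return a 40-character hexadecimal SHA-1 digest of the URL (assumed UTF-8)."""
    return hashlib.sha1(url.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    long_url = "https://example.com/" + "a" * (MAX_URL_LENGTH - 20)
    print(len(long_url), url_hash(long_url))
```

The digest is always 40 hexadecimal characters regardless of URL length, so it fits a short fixed-width column.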

Fix robots.txt checking to work for the first crawled URL. Download the file for your platform. Administrators use the robots.txt file to provide instructions for web crawlers. These instructions can be used to prevent search engines from indexing specific parts of your site.

There was a bug that caused robots.txt to be ignored if it wasn't in the cache.
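The affected crawler's code is not shown here, so the following is only a sketch of how that class of bug is usually avoided: on a cache miss, fetch and parse the rules before answering, instead of treating the host as unrestricted. The class name `RobotsCache`, the `fetch` callable, and the sample rules are all assumptions.

```python
from typing import Callable, Dict
from urllib.robotparser import RobotFileParser


class RobotsCache:
    """Per-host robots.txt cache that loads rules on first use."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch  # hypothetical callable: host -> robots.txt text
        self._parsers: Dict[str, RobotFileParser] = {}

    def allowed(self, host: str, agent: str, url: str) -> bool:
        parser = self._parsers.get(host)
        if parser is None:
            # Cache miss: load the rules now so the first crawled URL is checked too.
            parser = RobotFileParser()
            parser.parse(self._fetch(host).splitlines())
            self._parsers[host] = parser
        return parser.can_fetch(agent, url)


if __name__ == "__main__":
    cache = RobotsCache(lambda host: "User-agent: *\nDisallow: /private/\n")
    print(cache.allowed("example.com", "AnyBot", "https://example.com/private/x"))
```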

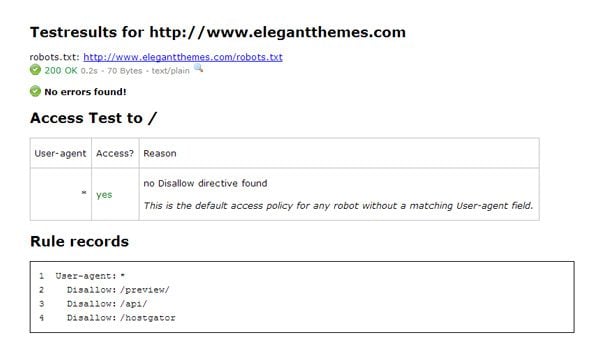

How To Create And Configure Your Robots Txt File Elegant Themes Blog

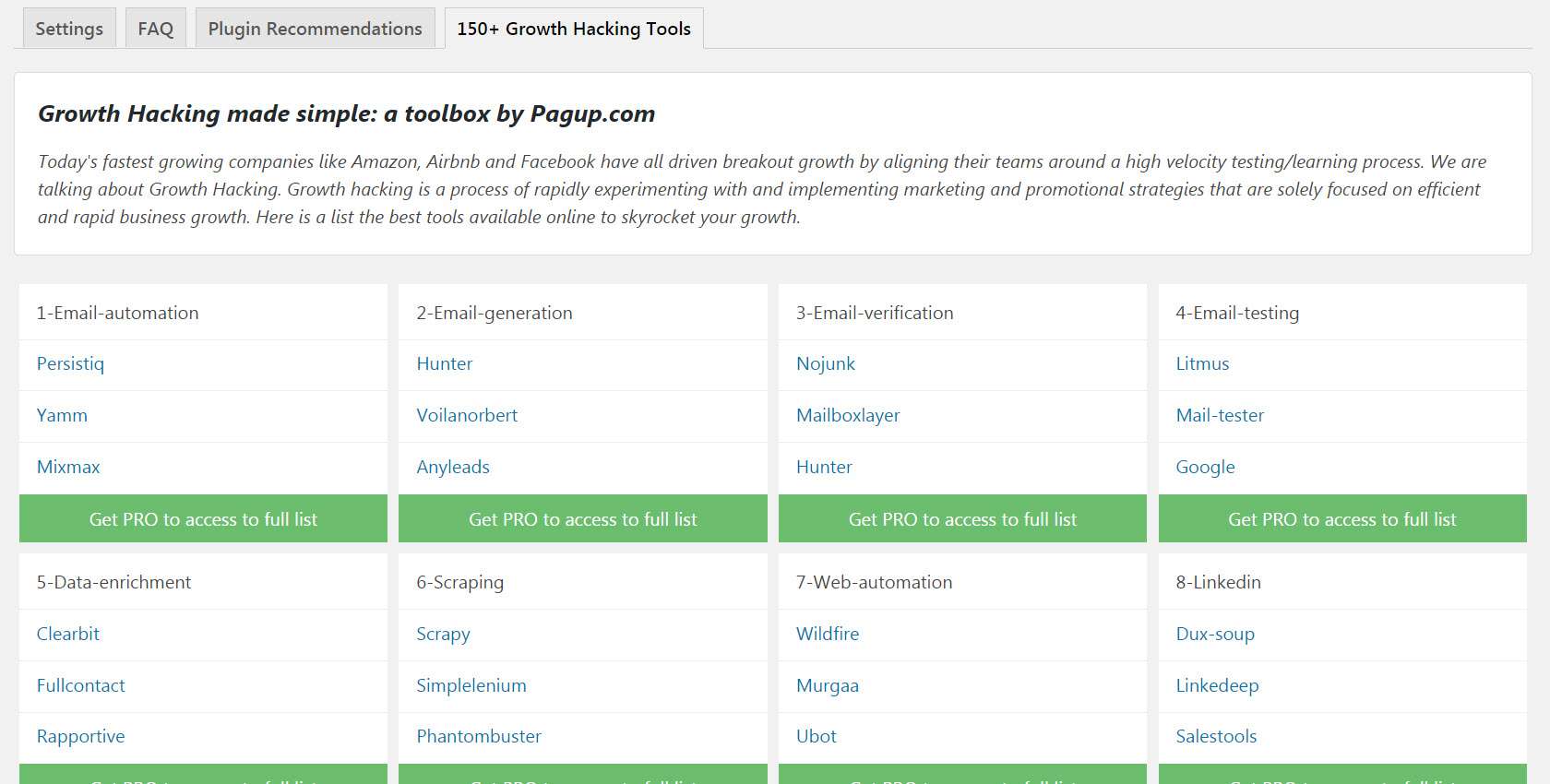

Wordpress Robots Txt Optimization Xml Sitemap Website Traffic Seo Ranking Booster Plugin For That

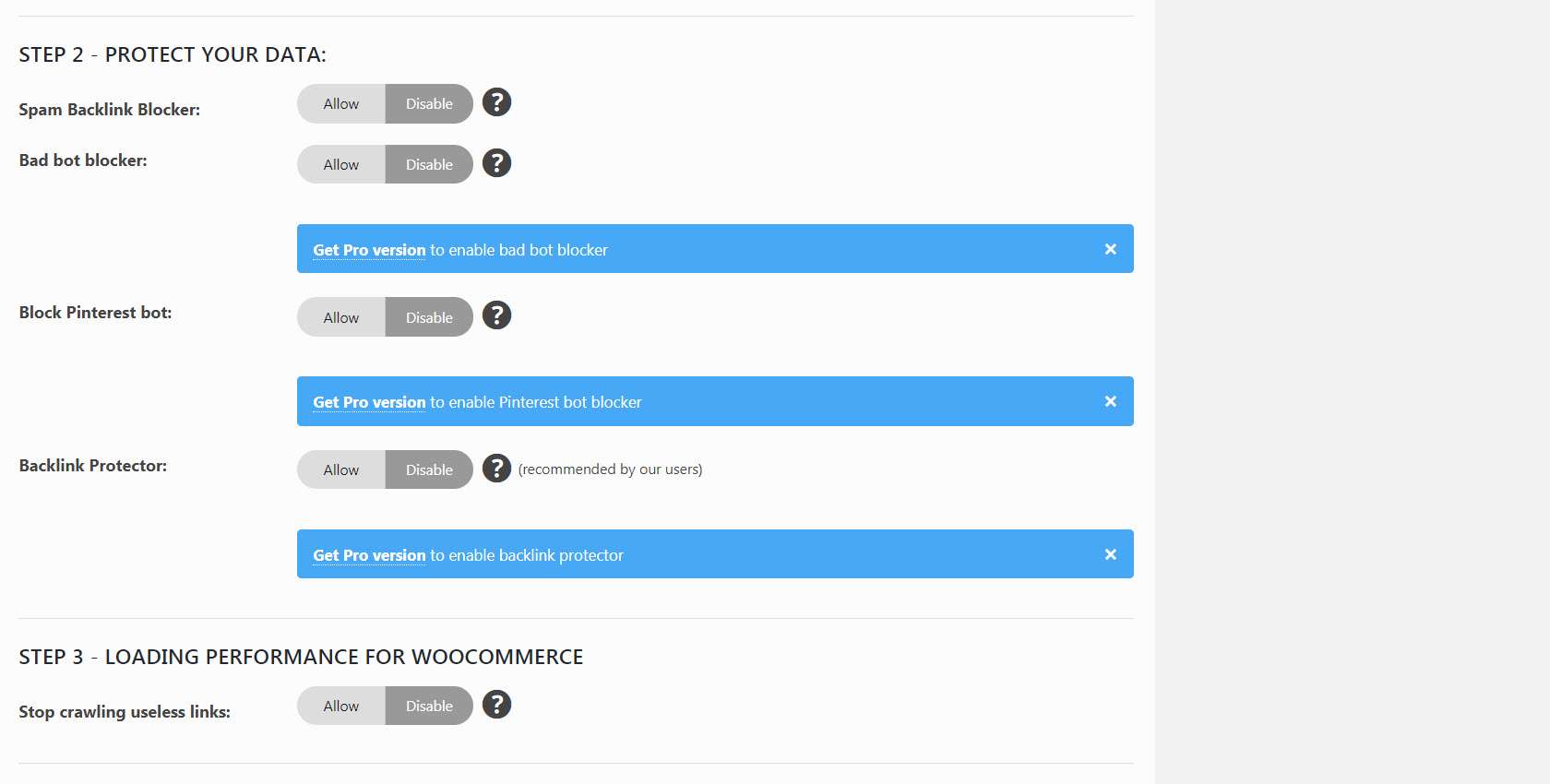

Prestashop Robots Txt Module Edit Your Robots Tx From Your Back Office

Consider Removing Old Sphinx Documentation Versions From Google Search Issue 8309 Sphinx Doc Sphinx Github
