Robots.txt in R

The robotstxt R package provides `guess_domain()`, a function that guesses the domain from a path. A robots.txt file is a set of instructions or rules that the crawlers and search engine bots visiting your site follow.

Complete Guide to Setting Up Robots.txt in WordPress

Think of a robots.txt file.

Robots.txt is a text file that webmasters create to instruct robots (typically search engine robots) how to crawl and index pages on their website. It helps the bots, or crawlers, of search engines such as Google and Bing crawl and index your site effectively. The robotstxt R package provides storage for HTTP request and response objects.

The package also defines an `as.list()` method for class `robotstxt_text`, along with a `fix_url()` helper. Suppose a search engine is about to visit a site: the robots.txt file tells it which pages not to crawl.

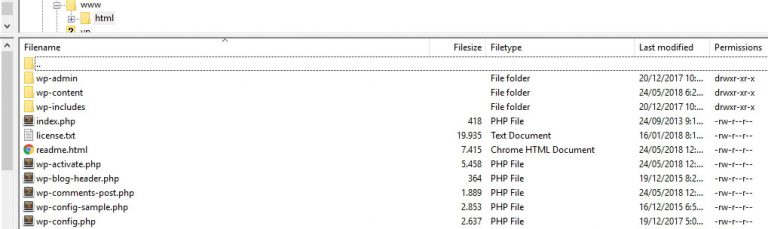

Screenshot of our robots.txt file. This text file contains directives that tell search engines which pages they may access (`Allow`) and which they may not (`Disallow`). On this site you can learn more about web robots.
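To see how `Allow` and `Disallow` directives are evaluated in practice, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The file contents, the `ExampleBot` user agent, and the `www.example.com` URLs are hypothetical. Because this parser has traditionally applied the first matching rule in file order, the narrower `Allow` exception is listed before the broader `Disallow`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block /private/ except for one public file.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit.pdf
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from text instead of fetching over HTTP

for url in (
    "https://www.example.com/private/press-kit.pdf",
    "https://www.example.com/private/report.html",
    "https://www.example.com/blog/",
):
    # can_fetch() answers: may this user agent crawl this URL?
    print(url, parser.can_fetch("ExampleBot", url))
```

This prints `True` for the press kit, `False` for the report, and `True` for the blog, which no rule mentions.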

`get_robotstxts()` is a function to get multiple robots.txt files. When it comes to robots.txt formatting, Google has fairly strict guidelines. Web robots, also known as web wanderers, crawlers, or spiders, are programs that traverse the Web automatically.

A robots.txt file only controls crawling behavior on the subdomain where it is hosted.
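Because the file is looked up per host, a crawler derives the robots.txt location from each page URL's scheme and host (including any port). A minimal Python sketch; `robots_txt_url` is a hypothetical helper and the example.com hosts are placeholders.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    The file lives at the root of the same scheme + host (+ port), so each
    subdomain needs its own robots.txt.
    """
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post-1?ref=home"))
print(robots_txt_url("https://shop.example.com/cart"))
```

The two pages resolve to two different files, `https://www.example.com/robots.txt` and `https://shop.example.com/robots.txt`, which is why a rule in one has no effect on the other.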

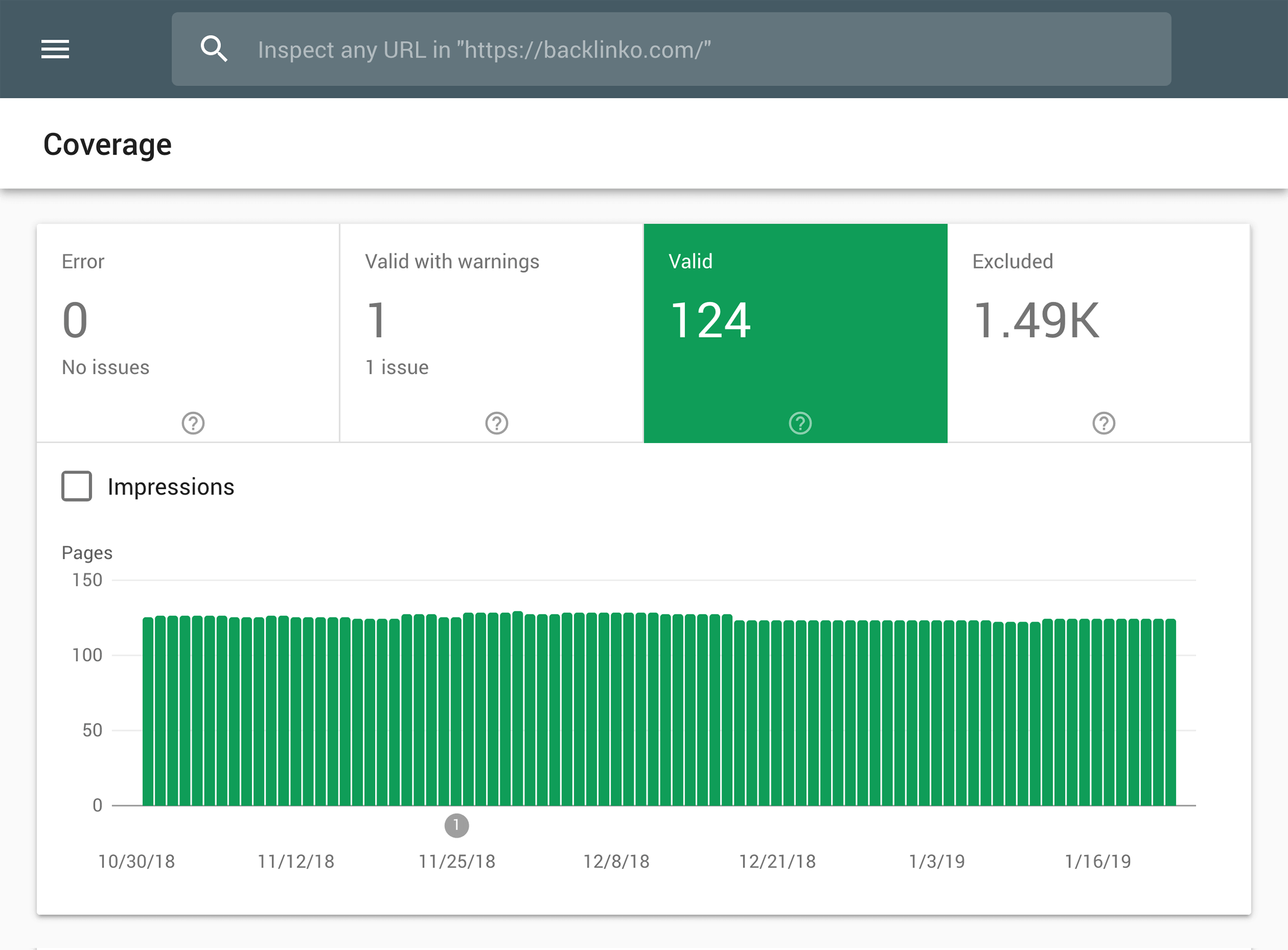

Create a robots.txt file. When Google or other search engines come to your site to read its content and store it in their search index, they look for a special file called robots.txt.

The robots.txt format: the robots.txt file, also known as the robots exclusion protocol or standard, is a text file that tells web robots (mostly search engines) which pages on your site they may crawl. In the robotstxt R package, the retrieval of robots.txt files is cached on a per-R-session basis, and restarting an R session invalidates the cache.

Robots.txt files are mostly intended for managing the activities of good bots such as web crawlers, since bad bots are unlikely to follow the instructions. I will walk through, step by step, how to edit your robots.txt so that search engines can make the most of it. Before a crawler visits the target page, it checks the robots.txt file.

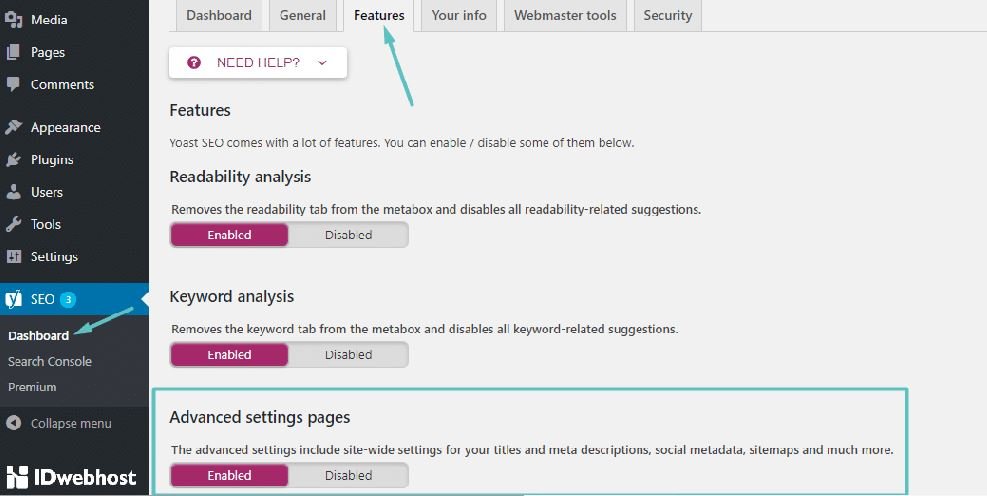

Also, setting the function parameter `force = TRUE` forces the package to re-retrieve the robots.txt file. So I'm briefly going to explore the rOpenSci robotstxt package by Peter Meissner. The robots.txt file plays a big role in SEO.
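The caching behaviour described for the package (results kept for the session, re-fetched when `force = TRUE`) can be illustrated outside R. The sketch below is not the robotstxt package's code: it is a hypothetical Python `RobotsTxtCache` class with a `force` flag named after the R parameter, and `fake_fetch` stands in for a real HTTP download.

```python
from typing import Callable, Dict, List


class RobotsTxtCache:
    """Hypothetical per-process cache of robots.txt text, keyed by domain.

    Entries live as long as the process (the "session"); force=True
    bypasses the cached copy and downloads again.
    """

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch               # function that actually downloads the file
        self._store: Dict[str, str] = {}  # domain -> robots.txt text

    def get(self, domain: str, force: bool = False) -> str:
        if force or domain not in self._store:
            self._store[domain] = self._fetch(domain)  # (re-)retrieve and cache
        return self._store[domain]


# Usage with a fake fetcher that records downloads instead of using the network.
calls: List[str] = []


def fake_fetch(domain: str) -> str:
    calls.append(domain)
    return "User-agent: *\nDisallow:\n"


cache = RobotsTxtCache(fake_fetch)
cache.get("example.com")              # downloads
cache.get("example.com")              # served from the cache
cache.get("example.com", force=True)  # downloads again
print(len(calls))
```

Only two downloads happen for three lookups; starting a new process (like restarting an R session) begins with an empty cache.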

Information about what may be crawled is often contained in a website's robots.txt file. Robots.txt is a small file that lets your website communicate with almost every web crawler (web robot).

I am fairly new to web scraping in R using rvest, and one question is whether a site gives permission for scraping. I'm slowly working on a new R data package for…
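Whether a site permits scraping can be read from its robots.txt. As a point of comparison, here is the analogous check with Python's standard library. The robots.txt text, the `my-scraper` user agent, and the helper name `scrape_permission` are hypothetical, and the text is parsed from a string so the example does not touch the network; a real scraper would fetch the site's file (for example with `RobotFileParser.set_url()` and `read()`). `Crawl-delay` is a non-standard directive that not every crawler honours.

```python
from typing import Optional, Tuple
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a site you plan to scrape.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5
Disallow: /search
Disallow: /account/
"""


def scrape_permission(robots_txt: str, user_agent: str, url: str) -> Tuple[bool, Optional[int]]:
    """Return (allowed?, crawl delay in seconds or None) for user_agent and url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url), parser.crawl_delay(user_agent)


print(scrape_permission(ROBOTS_TXT, "my-scraper", "https://www.example.com/articles/42"))
print(scrape_permission(ROBOTS_TXT, "my-scraper", "https://www.example.com/search?q=rvest"))
```

The article page is allowed and the search page is not; both answers come with the requested 5-second delay between requests.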

The highest priority when it comes to creating a robots.txt file is… If you want to control crawling on a different subdomain, you'll need a separate robots.txt file. The robots.txt file is one of the most critical files for any website that needs to show search engines such as Google and Yahoo the way to its content.

A robots.txt generator tool is another of the most in-demand tools for bloggers and website owners. We'll cover several ways to create a new robots.txt for WordPress in a minute. For now, though, let's talk about how to determine what rules… The R package robotstxt defines a number of functions, several of which are mentioned throughout this post.

A robots.txt file contains directives for search engines. Now that you know what robots.txt is, let's look at why it matters. If you use a site hosting service such as Wix or Blogger, you might not need to (or be able to) edit your robots.txt file directly. Instead, your provider might expose a search settings page or some other mechanism to tell search engines whether or not to crawl your page.

This file is a set of instructions telling search engines where they can look to crawl content and where they are not allowed to. Adding the wrong directives here can have a negative impact. You can use a robots.txt file for web pages (HTML, PDF, or other non-media formats that Google can read) to manage crawling traffic if you think…

This file is included in the source files of most websites. A robots.txt file is a set of instructions for bots, and its effect varies with the type of file it applies to.

In short, robots.txt decides which bots get access: in a setup like this, only the bot that the robots.txt file explicitly names is given free rein over the site.

You can use it to prevent search engines from crawling specific parts of your website and to give search engines helpful hints on how they can best crawl your website. So what is a robots.txt file used for?

A robots.txt file is used primarily to manage crawler traffic to your site and, depending on the file type, usually to keep a file off Google. Search engines such as Google use web robots to index web content, spammers use them to scan for email addresses, and they have many other uses.

Some of the package's functions take the text content of a robots.txt file as a character vector; the `check_strickt_ascii` argument sets whether to check that the content adheres to the RFC specification of using plain text (ASCII). One example file directs all bots, by default, to stay away from the entire site, but then presents an exception. For downloading the robots.txt file, the package includes `get_robotstxt_http_get()`.
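The "block everything, then allow an exception" pattern can be written as two groups. Here it is checked with Python's `urllib.robotparser`; naming `Googlebot` as the exception and the example URL are illustrative choices, not taken from the original file.

```python
from urllib.robotparser import RobotFileParser

# Block every bot by default, then make an exception for one named crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /

User-agent: Googlebot
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Googlebot", "Bingbot", "ExampleBot"):
    # A crawler uses the group that names it; any other crawler falls back to "*".
    print(agent, parser.can_fetch(agent, "https://www.example.com/any/page.html"))
```

Only `Googlebot` gets `True`; every crawler not named in the file is shut out by the `*` group.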

I built a script that dynamically generates a robots.txt file for search engine bots, which download the file when they seek direction on what parts of a website they are allowed to index. In short, a robots.txt file controls how search engines access your website.
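The script itself isn't shown, so here is a minimal Python sketch of the generation step only. `build_robots_txt`, the rule-dictionary layout, the `ExampleBot` group, and the sitemap URL are all hypothetical. Serving the result at `/robots.txt` with a `text/plain` content type would be left to your web framework.

```python
from typing import Dict, List, Optional


def build_robots_txt(groups: Dict[str, Dict[str, List[str]]],
                     sitemap: Optional[str] = None) -> str:
    """Render robots.txt text from {user_agent: {"allow": [...], "disallow": [...]}}."""
    lines: List[str] = []
    for agent, rules in groups.items():
        if lines:
            lines.append("")  # blank line separates groups
        lines.append(f"User-agent: {agent}")
        lines.extend(f"Allow: {path}" for path in rules.get("allow", []))
        lines.extend(f"Disallow: {path}" for path in rules.get("disallow", []))
    if sitemap:
        lines.extend(["", f"Sitemap: {sitemap}"])
    return "\n".join(lines) + "\n"


# The rules could be loaded from a database or config file on each request.
print(build_robots_txt(
    {"*": {"disallow": ["/admin/", "/tmp/"]}, "ExampleBot": {"disallow": ["/"]}},
    sitemap="https://www.example.com/sitemap.xml",
), end="")
```

Generating the file from data keeps the rules in one place, so changing what bots may index does not require editing a static file by hand.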

Every website is allowed only one robots.txt file, and that file has to follow a specific format. The robotstxt package provides easy access to a domain's robots.txt file from R. September 24, 2018.
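To make "a specific format" concrete: robots.txt is line-based, with `field: value` records, `#` comments, and blank lines between groups. The sketch below is a hypothetical Python checker (`check_robots_format` is not part of any library) that splits the text into records and flags common problems, including a non-ASCII check in the spirit of the R package's `check_strickt_ascii` option. The set of recognised fields is an assumption; crawlers differ in which extra fields they support.

```python
from typing import List, Tuple

# Fields most crawlers understand (an assumption: support varies by crawler).
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def check_robots_format(text: str) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Split robots.txt text into (field, value) records and list format problems."""
    records: List[Tuple[str, str]] = []
    problems: List[str] = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        if not raw.isascii():  # plain-text (ASCII) check
            problems.append(f"line {lineno}: non-ASCII characters")
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue  # blank or comment-only line
        if ":" not in line:
            problems.append(f"line {lineno}: missing ':' separator")
            continue
        field, value = line.split(":", 1)
        field = field.strip().lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {lineno}: unknown field {field!r}")
        records.append((field, value.strip()))
    return records, problems


records, problems = check_robots_format("User-agent: *\nDisalow: /tmp/\n")
print(records)
print(problems)
```

Here the misspelled `Disalow` is reported as an unknown field, the kind of typo a crawler would silently ignore.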

`list_merge()` merges a number of named lists in sequential order. Robots.txt is a file used by websites to let search bots know if or how the site should be crawled and indexed by the search engine. Many sites simply disallow crawling, meaning the site shouldn't be crawled by search engines or other crawler bots. However, the robots.txt file WordPress sets up for you by default isn't a physical file you can reach in any directory; WordPress generates it on the fly. It works, but if you want to make changes to it, you'll need to create your own file and upload it to your root folder as a replacement.
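Since a replacement file usually starts from WordPress's defaults, it helps to know how those rules interact. WordPress's virtual file typically disallows `/wp-admin/` but allows `/wp-admin/admin-ajax.php`. That exception works because crawlers such as Google pick the most specific (longest) matching rule, as RFC 9309 specifies, rather than the first one listed. The sketch below uses a hypothetical `is_allowed` helper and implements that precedence for plain path prefixes only. Python's own `urllib.robotparser` has historically used first-match order, so it may report `admin-ajax.php` as blocked for this file.

```python
from typing import List, Tuple

# Rules typically found in WordPress's default (virtual) robots.txt.
WP_RULES: List[Tuple[str, str]] = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]


def is_allowed(rules: List[Tuple[str, str]], path: str) -> bool:
    """Longest-match precedence (RFC 9309): the most specific matching rule
    wins, and allow wins a tie with disallow.
    Simplification: plain prefix matching; '*' and '$' wildcards are not handled."""
    best_len = -1
    allowed = True  # no matching rule: crawling is allowed
    for kind, rule_path in rules:
        # An empty rule value matches nothing.
        if rule_path and path.startswith(rule_path):
            length = len(rule_path)
            if length > best_len or (length == best_len and kind == "allow"):
                best_len, allowed = length, kind == "allow"
    return allowed


for path in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/sample-page/"):
    print(path, is_allowed(WP_RULES, path))
```

`admin-ajax.php` stays crawlable, the rest of `/wp-admin/` is blocked, and ordinary pages are unaffected.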

The package also includes `http_domain_changed()`. The robots.txt file is part of the Robots Exclusion Protocol (REP), a group of web standards that regulate how robots crawl the web, access content, and index it.

